Spam Filtering using Naive Bayes Classifier

I. Overview of Naive Bayes

Naive Bayes is a probabilistic classification method based on the Bayes Theorem. In general, the Bayes Theorem gives the relationship between the probabilities of two events and their conditional probability. The classifier assumes that the presence or absence of a particular feature of a class is unrelated to the presence or absence of other features.

Since, Naive Bayes classifiers are easy to implement and can execute efficiently even without prior knowledge of the data, they are among the most popular algorithms used for classifying the text documents. One of the best example of Naive Bayes implementation is the Spam Filtering. Bayesian spam filtering has become a popular mechanism to distinguish spam e-mail from the legitimate e-mail.

II. Example Use-Case

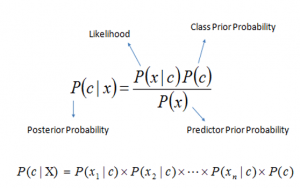

Bayes theorem provides a way of calculating the posterior probability, P(c|x), from P(c), P(x), and P(x|c). Naive Bayes classifier assume that the effect of the value of a predictor (x) on a given class (c) is independent of the values of other predictors. This assumption is called class conditional independence.

- P(c|x) is the posterior probability of class (target) given predictor(attribute).

- P(c) is the prior probability of class.

- P(x|c) is the likelihood which is the probability of predictor given class.

- P(x) is the prior probability of predictor.

Let’s understand the Naive Bayes Classifier in a simpler term; Consider that you are working as a Security Guard at a Airport, your task is to look at the people who pass the security line and pick some of them for a detailed checking. Initially, you assign a ‘risk value’ for each person and at the beginning you set this value to Zero.

Now you start studying various features of the person in front of you: Is it a male or a female? Is it a kid? Is he behaving nervously? Is he carrying a big bag? Is he alone? Did the metal detector beep? Is he a foreigner? and others. For each of these features you increase or decrease the risk value of the person being a criminal. For example, if you know that the proportion of males among criminals is the same as the proportion of males among non-criminals, observing that a person is male will not affect his risk value at all. If, however, there are more males among criminals (suppose the percentage is, say, 70%) than among decent people (where the proportion is around 50%), observing that a person in front of you is a male will increase the “risk level” by some amount (the value is log(70%/50%) ~ 0.3, to be precise).

You can continue this as long as you want, including more and more features, each of which will modify your total risk value by either increasing it (if you know this particular feature is more representative of a criminal) or decreasing (if the features is more representative of a decent person). When you are done collecting the features, all is left for you is to compare the result with some threshold level. Say, if the total risk value exceeds 10, you declare the person in front of you to be potentially dangerous and take it into a detailed checking.

Other possible application’s of Naive Bayes Include;

- News Categorization

- E-mail Spam Detection

- Face Recognition

- Sentiment Analytic

- Medical Diagnosis

- Fraud Detection

III. Conclusion

Naive Bayes is easy to build and particularly useful for very large data-sets. Along with simplicity, Naive Bayes is known to outperform even the most-sophisticated classification methods. On the flip side, the Naive Bayes is known as ‘Bad Estimator’.