Pentaho Upgrade Comparison

Up to 40% of security administrators don’t prioritize updating their software. So it’s no big surprise that the average software user doesn’t upgrade as often as they should either.

However, with the worldwide spread of large ransomware attacks such as WannaCry in the last few years, we should all take upgrading more seriously. Fearmongering aside, there are some real benefits to upgrading software when new versions are released.

- Why Pentaho Upgrade

- Challenges and Impacts

- Hardware Requirements

- Advantages of Upgrade

- Features Briefing

1. Why Pentaho Upgrade?

The truth is that technology will always require upgrades. Consumer demand for new features, changes to government compliance and reporting, and the desire for things to run faster are a few of the reasons why businesses will always need upgrades.

Here are the top reasons why you should always be upgrading your software to the latest version.

2. Challenges and Impacts

Loss of Productivity

Teams spend more time fixing issues, maintaining the solution, and placating business users with patch fixes, which leaves little time to add value.

Compliance Issues

An obsolete stack may fail internal IT audits and compliance requirements (security audits, privacy policies).

Compromised Support

Vendor support options for older versions are limited or unavailable, which makes it difficult to meet deadlines when product issues arise.

Performance Optimization

Jobs execute slowly and system resources are underutilized, which frustrates users over time.

Unstable Connectivity

Intermittent connection loss leaves reports and dashboards incomplete, and there are no new features for connecting to the latest source and target databases.

Eco System Enhancement

The older ecosystem is a constant worry: most system resources are underutilized, jobs are difficult to run remotely, and plugins are unsupported because of older OS versions, Java versions, and other dependent software.

Competitive Disadvantage

Older versions lack features that make it easy to build and generate reports and dashboards, and they miss the better forecasting that newer features make possible.

Ease of Maintenance

Maintenance becomes an overhead: more time goes into maintaining the application than using it, and the cost of managing resources increases.

Security Implications

Support and maintenance for old versions become hard to obtain. IT professionals cannot support technology that has become too old, and younger IT professionals may have limited knowledge of old systems.

Compatibility Issues

Business solutions are hard to implement on unsupported applications, and fewer suitable plugins, drivers, and similar components are available for implementing logic.

Hindered Innovation

New business logic is difficult to implement, and solutions stay confined to a fixed implementation because newer technology is not available.

Value-Add Features

No new features are added, so the business misses out on features that could simplify a process and struggles to stay competitive.

Security Upgrades

Ageing systems are not actively security-checked, so businesses using obsolete versions are vulnerable to having information stolen or compromised. Newer technology has better security checking in place.

New Stack Adoption

Teams get stuck in the traditional way of doing things, and keeping track of all the new tools available for the stack can be overwhelming.

Continued Support

Outdated software is vulnerable to malicious attacks and to system failure.

Research & Development

Businesses using older technology won’t have the functionality that could give them a competitive advantage. New operating systems can provide enhancements that weren’t possible in older systems.

Efficiency

The old core logic of the solution slows response times and delays output. End users have to wait a long time and are left dissatisfied, and a dissatisfied customer is a risk to the business.

IT Support

New team members have limited knowledge of the older business solution and take a long time to understand it well enough to resolve an issue, which increases support costs.

Data Inaccuracy

Logic and formulae implemented long ago may no longer yield accurate results. A small change in the decimals can have a large impact on the business.

Malware Risks

Older versions are easy prey for hackers, whereas new software addresses the latest security vulnerabilities.

Being Trustworthy

As software advances through multiple versions, it earns more trust from its users, especially for error correction and for keeping abreast of changes in technology.

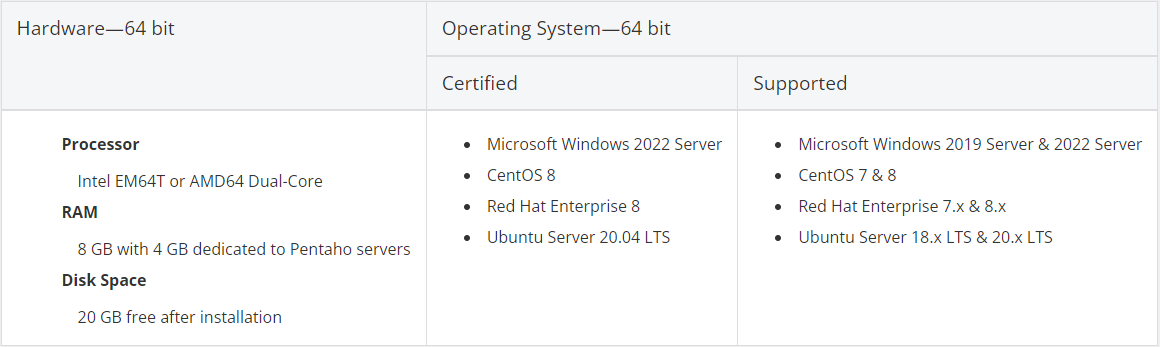

3. Hardware Requirements

4. Advantages of Pentaho Upgrade

Upgrades often bring many benefits. Your business could be missing out on a new feature that could simplify a process, provide insight into data that was not previously accessible, and more.

Old and outdated software is vulnerable to hackers and cyber criminals, and updates keep you safe by closing exploitable holes into your organization. Staying current is especially important because software update notes often reveal the patched entry points to the public.

Upgrading systems sounds expensive; however, the truth is that older systems have more issues and consequently more costs. The cost of disruption caused by unstable systems and software can very quickly exceed the cost of investing in an upgraded system. Don’t put your business at risk by failing to upgrade your software, or you may regret it.

The following table lists the features available in each Pentaho version, grouped by the advantage an upgrade provides.

| CATEGORY | FEATURES | PENTAHO 8.3 | PENTAHO 9.0 | PENTAHO 9.3 |
| --- | --- | --- | --- | --- |
| COMPLIANCE/SECURITY | Java 11 | NO | NO | YES |
| COMPLIANCE/SECURITY | Visualization API 3.0 support | NO | NO | YES |
| COMPLIANCE/SECURITY | Security improvements | NO | NO | YES |
| COMPLIANCE/SECURITY | Component upgrades | NO | NO | YES |
| COMPLIANCE/SECURITY | Increased AWS S3 Security | YES | YES | YES |
| PERFORMANCE | Improved Streaming Steps in PDI | YES | YES | YES |
| PERFORMANCE | Increased Spark Capabilities in PDI | NO | YES | YES |
| PERFORMANCE | Data Integration Improvements | YES | YES | YES |
| PERFORMANCE | Business Analytics Improvements | YES | YES | YES |
| PERFORMANCE | PDI lineage improvements | NO | YES | YES |
| PERFORMANCE | Analyzer improvements | NO | NO | YES |
| PERFORMANCE | Interactive Reports improvements | NO | NO | YES |
| ENHANCEMENTS | Google Cloud Data Enhancements | YES | YES | YES |
| ENHANCEMENTS | Create Snowflake warehouse | YES | YES | YES |
| ENHANCEMENTS | Modify Snowflake warehouse | YES | YES | YES |
| ENHANCEMENTS | Delete Snowflake warehouse | YES | YES | YES |
| ENHANCEMENTS | Start Snowflake warehouse | YES | YES | YES |
| ENHANCEMENTS | Stop Snowflake warehouse | YES | YES | YES |
| ENHANCEMENTS | AEL enhancements | YES | YES | YES |
| ENHANCEMENTS | PDI expanded metadata injection support | YES | YES | YES |
| ENHANCEMENTS | Stored VFS connections | YES | YES | YES |
| ENHANCEMENTS | Python list support | YES | YES | YES |
| ENHANCEMENTS | Snowflake enhancements | NO | YES | YES |
| ENHANCEMENTS | Amazon Redshift enhancements | NO | YES | YES |
| ENHANCEMENTS | Analyzer enhancements | NO | NO | YES |
| ENHANCEMENTS | VFS connection enhancements and S3 support | NO | YES | YES |
| ENHANCEMENTS | AMQP and Kinesis improvements | NO | YES | YES |
| ENHANCEMENTS | JMS Consumer improvements | NO | YES | YES |
| ENHANCEMENTS | Text File Input and Output improvements | NO | YES | YES |
| ENHANCEMENTS | Analyzer improvements | NO | YES | YES |
| ENHANCEMENTS | Dashboard Designer improvements | NO | YES | YES |
| ENHANCEMENTS | MongoDB improvements | NO | NO | YES |
| ENHANCEMENTS | Reduced Pentaho installation download size and improved startup time | NO | NO | YES |
| ENHANCEMENTS | Query performance improvement | NO | NO | YES |
| VALUE ADD FEATURES | New and Updated Big Data Steps | YES | YES | YES |
| VALUE ADD FEATURES | Access Snowflake as a data source | YES | YES | YES |
| VALUE ADD FEATURES | Snowflake Plugin for PDI | YES | YES | YES |
| VALUE ADD FEATURES | Bulk load into Snowflake | YES | YES | YES |
| VALUE ADD FEATURES | Pentaho Server Upgrade Installer | YES | YES | YES |
| VALUE ADD FEATURES | New Amazon Redshift bulk loader | YES | YES | YES |
| VALUE ADD FEATURES | Kinesis Consumer and Producer | YES | YES | YES |
| VALUE ADD FEATURES | Access to HCP from PDI | YES | YES | YES |
| VALUE ADD FEATURES | Access to multiple Hadoop clusters from different vendors in PDI | NO | YES | YES |
| VALUE ADD FEATURES | Step-level Spark tuning in PDI transformations | NO | YES | YES |
| VALUE ADD FEATURES | Copybook input | NO | YES | YES |
| VALUE ADD FEATURES | Read metadata from Copybook | NO | YES | YES |
| VALUE ADD FEATURES | PDI expanded metadata injection support | NO | YES | YES |
| VALUE ADD FEATURES | Docker container deployment | NO | NO | YES |
| VALUE ADD FEATURES | Improved handling of daylight savings when scheduling reports | NO | NO | YES |
| VALUE ADD FEATURES | Enable or disable report options at the server level | NO | NO | YES |
| VALUE ADD FEATURES | Select parameter values when scheduling reports | NO | NO | YES |
| VALUE ADD FEATURES | Drill up or down a dimension | NO | NO | YES |
| VALUE ADD FEATURES | Export an Analyzer report as a JSON file through a URL | NO | NO | YES |

5. Features Briefing

1) Improved Streaming Steps in PDI

Improved access to data stored in data sources.

2) Increased Spark Capabilities in PDI

The Avro and Parquet steps can be used in transformations running on the Kettle engine or the Spark engine via Adaptive Execution Layer (AEL).

3) Data Integration Improvements

PDI includes improvements in AEL for Spark, including enhancements to work with the Apache Spark Dataset API. PDI includes Spark-related improvements to two steps: Switch-Case and Merge rows (diff).

4) Business Analytics Improvements

Visualization API 3.0 is now supported. Visualization API 3.0 provides a simple, powerful, tested, and documented approach to develop new visualizations and configure visualizations.

5) PDI lineage improvements

Newly added custom analyzer steps support data lineage tracking.

6) Analyzer improvements

Enhancements to Analyzer improve the user experience when exporting reports.

7) Interactive Reports improvements

You can now search for fields via the Find text input box.

You can also enable or disable the Select distinct option in the Query Settings dialog box by default.

8) Google Cloud Data Enhancements

Pentaho Data Integration (PDI) now stores VFS connection properties, so you can reuse the connection information whenever you want to access your Google Cloud Storage or Hitachi Content Platform content.

9) Create Snowflake warehouse

The Create Snowflake warehouse entry creates a new Snowflake virtual warehouse from within a PDI job. This entry builds the warehouse with the attributes you specify.

10) Bulk load into Snowflake

The Bulk load into Snowflake job entry in PDI loads vast amounts of data into a Snowflake virtual warehouse in a single session.

11) Modify Snowflake warehouse

The Modify Snowflake warehouse job entry edits warehouse settings.

12) Delete Snowflake warehouse

The Delete Snowflake warehouse job entry deletes (drops) a virtual warehouse from your Snowflake environment. Dropping unwanted virtual warehouses helps you clean up the Snowflake management console from PDI.

13) Start Snowflake warehouse

The Start Snowflake warehouse entry starts/resumes a virtual warehouse in Snowflake from PDI.

14) Stop Snowflake warehouse

The Stop Snowflake warehouse entry stops (suspends) a virtual warehouse in Snowflake from PDI.
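
The five warehouse job entries map naturally onto Snowflake's warehouse lifecycle statements. The sketch below shows one plausible set of equivalent SQL statements, built in Python. How the PDI entries are implemented internally is not described here, so treat the helper names (`create_warehouse`, `modify_warehouse`, and so on) and the default size and auto-suspend values as illustrative assumptions; only the SQL keywords themselves are standard Snowflake DDL.

```python
import re

# Helper names are hypothetical; the SQL keywords are standard Snowflake warehouse DDL.
_NAME = re.compile(r"[A-Za-z_][A-Za-z0-9_$]*")
_SIZES = {"XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE"}


def _safe_name(name: str) -> str:
    """Allow only plain unquoted identifiers so the name can be inlined safely."""
    if not _NAME.fullmatch(name):
        raise ValueError(f"unsupported warehouse name: {name!r}")
    return name


def _safe_size(size: str) -> str:
    """Normalize and validate the warehouse size keyword."""
    size = size.upper()
    if size not in _SIZES:
        raise ValueError(f"unsupported warehouse size: {size!r}")
    return size


def create_warehouse(name: str, size: str = "XSMALL", auto_suspend_seconds: int = 300) -> str:
    """Statement comparable to the Create Snowflake warehouse entry."""
    return (
        f"CREATE WAREHOUSE IF NOT EXISTS {_safe_name(name)} "
        f"WITH WAREHOUSE_SIZE = '{_safe_size(size)}' "
        f"AUTO_SUSPEND = {int(auto_suspend_seconds)}"
    )


def modify_warehouse(name: str, size: str) -> str:
    """Statement comparable to the Modify Snowflake warehouse entry."""
    return f"ALTER WAREHOUSE {_safe_name(name)} SET WAREHOUSE_SIZE = '{_safe_size(size)}'"


def start_warehouse(name: str) -> str:
    """Statement comparable to the Start Snowflake warehouse entry."""
    return f"ALTER WAREHOUSE {_safe_name(name)} RESUME"


def stop_warehouse(name: str) -> str:
    """Statement comparable to the Stop Snowflake warehouse entry."""
    return f"ALTER WAREHOUSE {_safe_name(name)} SUSPEND"


def delete_warehouse(name: str) -> str:
    """Statement comparable to the Delete Snowflake warehouse entry."""
    return f"DROP WAREHOUSE IF EXISTS {_safe_name(name)}"


if __name__ == "__main__":
    for statement in (
        create_warehouse("ETL_WH"),
        modify_warehouse("ETL_WH", "medium"),
        start_warehouse("ETL_WH"),
        stop_warehouse("ETL_WH"),
        delete_warehouse("ETL_WH"),
    ):
        print(statement)
```

The helpers only build strings; running them against Snowflake would require a connection and suitable privileges, which are outside the scope of this sketch.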

15) AEL (adaptive execution layer) enhancements

PDI expanded metadata injection support

Metadata injection passes metadata to transformation templates at runtime, which drastically increases the productivity, reusability, and automation of transformation workflows. With this ability, you can support use cases such as onboarding data from many files and tables into data lakes.
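
The idea behind metadata injection can be illustrated outside PDI with a short Python sketch: one reusable "template" routine receives the delimiter and field definitions at runtime instead of having them hard-coded, so the same logic can onboard differently shaped files. This is a conceptual analogy only, not PDI's implementation; `run_template` and the metadata dictionary layout are hypothetical.

```python
import csv
import io


def run_template(raw_text, metadata):
    """One reusable 'template': parse delimited text using metadata supplied at runtime."""
    reader = csv.reader(io.StringIO(raw_text), delimiter=metadata["delimiter"])
    rows = []
    for record in reader:
        # Each field definition is a (name, cast) pair injected by the caller.
        rows.append(
            {name: cast(value) for (name, cast), value in zip(metadata["fields"], record)}
        )
    return rows


# Two sources with different shapes, handled by the same template.
customers_meta = {"delimiter": ",", "fields": [("id", int), ("name", str)]}
orders_meta = {"delimiter": ";", "fields": [("order_id", int), ("amount", float)]}

customers = run_template("1,Ada\n2,Grace\n", customers_meta)
orders = run_template("10;99.5\n11;12.0\n", orders_meta)

if __name__ == "__main__":
    print(customers)
    print(orders)
```

Adding a third source means supplying a third metadata dictionary, with no change to the template itself.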

Stored VFS connections

Pentaho Data Integration (PDI) now stores VFS connection properties so you can use the connection information whenever you want to access your Google Cloud Storage or Hitachi Content Platform content

Python list support

The Python Executor step now supports a Python list of dictionaries as a data input type. This new input type for All Rows processing converts each row in a PDI stream to a Python dictionary and all the dictionaries are then put into a Python list.
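
As a rough illustration of that data shape, the sketch below builds a list of dictionaries from a few rows and then processes it the way a script might. The variable name under which the Python Executor step exposes the list is not stated here, so `rows_to_python_list` is a hypothetical stand-in for the conversion, and the field names and values are made up for the example.

```python
def rows_to_python_list(field_names, rows):
    """Stand-in for the conversion: each row becomes a dict, and all dicts go into one list."""
    return [dict(zip(field_names, row)) for row in rows]


def total_by_region(records):
    """Example script logic that consumes the list of dictionaries."""
    totals = {}
    for record in records:
        totals[record["region"]] = totals.get(record["region"], 0) + record["amount"]
    return totals


# Hypothetical stream content: field names plus three rows.
records = rows_to_python_list(
    ["region", "amount"],
    [("EU", 10), ("US", 5), ("EU", 2)],
)
totals = total_by_region(records)

if __name__ == "__main__":
    print(records)
    print(totals)
```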

Snowflake enhancements

With the Pentaho Snowflake plugin, you can now use PDI to manage additional Snowflake tasks, including bulk loading data into a Snowflake data warehouse.

Amazon Redshift enhancements

The Bulk load into Amazon Redshift entry is now available in Pentaho Data Integration. It enables greater productivity and automation while populating your Amazon Redshift data warehouse and eliminates the need for repetitive SQL scripting.

16) VFS connection enhancements and S3 support

A new Open dialog box provides access to your VFS connections, including support for S3, Snowflake staging (read-only), Hitachi Content Platform, and Google Cloud Storage.

17) Text File Input and Output improvements

You can set up the Text File Input step to run on the Spark engine using AEL. Additionally, the Header option in the Text file output step now works on Spark.

18) Analyzer improvements

You can now display column totals at the top and row totals on the left for Analyzer reports

19) Dashboard Designer improvements

You can now export Analyzer reports as PDFs, CSVs, or Excel workbooks.

20) MongoDB improvements

You can now use MongoDB Atlas string format to connect Pentaho to MongoDB. With this type of string, you no longer need to specify all your cluster members in the connection
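
To make the difference concrete, the sketch below compares a standard MongoDB connection string, which lists every cluster member, with the seed-list form that uses the `mongodb+srv://` scheme. It assumes that "MongoDB Atlas string format" refers to this SRV form; the host names, database name, and replica set name are placeholders, and `seed_hosts` is a hypothetical helper.

```python
from urllib.parse import urlsplit

# Placeholder URIs: every host, database, and replica set name here is made up.
STANDARD_URI = (
    "mongodb://host1.example.net:27017,host2.example.net:27017,"
    "host3.example.net:27017/sales?replicaSet=rs0"
)
SRV_URI = "mongodb+srv://cluster0.example.net/sales"


def seed_hosts(uri):
    """Return the host entries written explicitly in a MongoDB connection string."""
    netloc = urlsplit(uri).netloc
    # Drop optional "user:password@" credentials before splitting the host list.
    hosts = netloc.rsplit("@", 1)[-1]
    return hosts.split(",")


if __name__ == "__main__":
    print(seed_hosts(STANDARD_URI))  # three members listed by hand
    print(seed_hosts(SRV_URI))       # a single DNS name; members are discovered via DNS
```

With the SRV form, cluster membership is resolved through DNS records at connect time, so the connection string does not need to change when members are added or replaced.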

21) Reduced Pentaho installation download size

The download size of the Pentaho installation is reduced by almost half, leading to a smaller footprint and quicker download time

22) Improved Pentaho startup time

Pentaho’s start time is also significantly improved now that only your specific drivers are loaded at startup instead of the entire set

23) Query performance improvement

Analyzer’s query caching is improved to increase performance.

24) Access Snowflake as a data source

You can now connect to Snowflake as a Pentaho data source. Snowflake is a fully relational ANSI SQL data warehouse-as-a-service running in the cloud. You can now use your Snowflake data in ETL activities with PDI or visualize data with Analyzer, Pentaho Interactive Reports, and Pentaho Report Designer

25) Snowflake Plugin for PDI

With the Pentaho Snowflake plugin, you can now use PDI to manage additional Snowflake tasks, including bulk loading data into a Snowflake data warehouse.

26) New Amazon Redshift bulk loader

The Bulk load into Amazon Redshift entry is now available in Pentaho Data Integration to enable greater productivity and automation while populating your Amazon Redshift data warehouse, eliminating the need for repetitive SQL scripting

27) Kinesis Consumer and Producer

The Kinesis Consumer and Kinesis Producer transformation steps leverage the real-time processing capabilities of Amazon Kinesis Data Streams from within PDI.

28) Access to HCP from PDI

Hitachi Content Platform (HCP) is the distributed, object data storage system from Hitachi Vantara that provides a scalable, easy-to-use repository that can accommodate all types of fixed-content data from simple text files to images and video to multi-gigabyte database images.

29) Access to multiple Hadoop clusters from different vendors in PDI

You can now access and process data from multiple Hadoop clusters from different vendors, including different versions, through drivers and named connections within a single session of PDI.

30) Step-level Spark tuning in PDI transformations

Step-level tuning can improve the performance of your PDI transformations executed on the Spark engine

31) Copybook input

Reads the mainframe binary data files that were originally created using the copybook definition file and outputs the converted data to the PDI stream for use in transformations.
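
For readers unfamiliar with copybook-described data, the sketch below decodes one fixed-width mainframe record by hand in Python. It is not how the Copybook input step is implemented; it only illustrates the kind of conversion involved. The record layout (a 5-byte EBCDIC name followed by a 4-byte packed-decimal balance), the code page `cp037`, and the function names are assumptions chosen for the example.

```python
from decimal import Decimal


def unpack_comp3(data: bytes, scale: int = 0) -> Decimal:
    """Decode a packed-decimal (COMP-3) field: two digits per byte, sign in the last nibble."""
    nibbles = []
    for byte in data:
        nibbles.append(byte >> 4)
        nibbles.append(byte & 0x0F)
    sign = nibbles.pop()  # 0xD marks a negative value; 0xC or 0xF marks positive/unsigned
    value = int("".join(str(digit) for digit in nibbles))
    if sign == 0x0D:
        value = -value
    return Decimal(value).scaleb(-scale)


def parse_record(record: bytes) -> dict:
    """Hypothetical layout: NAME PIC X(5), BALANCE PIC S9(5)V99 COMP-3 (4 bytes)."""
    return {
        "name": record[0:5].decode("cp037").rstrip(),  # EBCDIC text to str
        "balance": unpack_comp3(record[5:9], scale=2),
    }


# Build a sample record: EBCDIC "ALICE" plus packed decimal digits 0123456 with sign D.
record = "ALICE".encode("cp037") + bytes([0x01, 0x23, 0x45, 0x6D])
parsed = parse_record(record)

if __name__ == "__main__":
    print(parsed)
```

In a real copybook the field offsets, lengths, and picture clauses come from the definition file rather than being hard-coded as they are here.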

32) Read metadata from Copybook

Reads the metadata of a copybook definition file to use with ETL metadata injection in PDI.

33) PDI expanded metadata injection support

A new metadata injection example is included in the Pentaho 9.0 distribution.

34) Docker container deployment

You can now create and deploy Docker containers of Pentaho products with a command line tool

35) Improved handling of daylight savings when scheduling reports

The new Ignore daylight saving adjustment option permits your report to run at least once every 24 hours, regardless of daylight saving.
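
The scheduling problem this addresses can be seen with a little date arithmetic. The sketch below compares a schedule pinned to 09:00 local time with a fixed 24-hour interval across the start of US daylight saving time on 10 March 2024. It uses fixed UTC offsets for US Eastern time to stay self-contained, and it is only an illustration of the underlying gap; exactly how the Pentaho option computes run times is not described here.

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets for US Eastern time on either side of the 10 March 2024 change.
EST = timezone(timedelta(hours=-5), "EST")  # standard time
EDT = timezone(timedelta(hours=-4), "EDT")  # daylight time

last_run = datetime(2024, 3, 9, 9, 0, tzinfo=EST)

# Wall-clock schedule: the next run is at 09:00 local time, which is now in EDT.
wall_clock_next = datetime(2024, 3, 10, 9, 0, tzinfo=EDT)
wall_clock_gap = wall_clock_next - last_run

# Fixed-interval schedule: the next run is exactly 24 elapsed hours later.
fixed_next = (last_run + timedelta(hours=24)).astimezone(EDT)
fixed_gap = fixed_next - last_run

if __name__ == "__main__":
    print("wall-clock gap:", wall_clock_gap)
    print("fixed-interval gap:", fixed_gap, "-> local time", fixed_next.strftime("%H:%M"))
```

The wall-clock schedule keeps the local start time but produces a gap that is not 24 hours on the transition day, while the fixed interval keeps the 24-hour gap and lets the local start time shift by an hour.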

36) Enable or disable report options at the server level

The new Show Empty Rows where the Measure cells is blank property improves performance by avoiding cross-joins.

37) Select parameter values when scheduling reports

You can now add parameter values when scheduling an Analyzer report from the Pentaho User Console and set default values at the time a report is scheduled to run.

38) Export an Analyzer report as a JSON file through a URL

You can now export an Analyzer report as a JSON file from a Pentaho repository through a URL, which can be useful when you want to export reports from a different scheduler.