Obtaining Accurate Predictions using Ensemble Learning Techniques

I. Introduction

Ensemble methods are techniques that create multiple models and then combine them to produce improved results. Ensemble methods usually produce more accurate solutions than a single model would. In this blog post, I will cover different ensemble learning techniques and their applications.

II. Concepts of Ensemble Learning

Before we proceed with the different techniques used in ensemble learning, let’s understand the concept with an example. Suppose you want to try a restaurant you have never been to, and you would like some preliminary feedback on it before going. What are the possible ways to get that feedback?

A: You could ask one of your friends to rate the restaurant.

B: You could ask 5 of your colleagues to rate the restaurant.

C: How about asking 50 people to rate the restaurant?

In Case C, the responses would be more generalized and diverse, since you now hear from people with different perspectives. This is likely to give a more reliable picture of the restaurant than the previous cases, because the quirks of any single person’s opinion tend to cancel out. From these examples, you can infer that a diverse group of people is likely to make better decisions than an individual. The same is true for a diverse set of models compared with a single model. In machine learning, this diversification is achieved by a technique called ensemble learning.
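The intuition can be made concrete with a small calculation. The sketch below assumes, unrealistically, that every model solves a binary task correctly with the same probability p, independently of the other models; real models make correlated errors, which is exactly why diversity among them matters. The function name `majority_vote_accuracy` is hypothetical, introduced only for this illustration.

```python
from math import comb


def majority_vote_accuracy(n_models: int, p: float) -> float:
    """Probability that a majority vote of n_models independent binary
    classifiers, each correct with probability p, is correct.

    n_models is assumed to be odd so that ties cannot occur.
    """
    k_min = n_models // 2 + 1  # smallest number of correct votes that wins
    # Sum the binomial probabilities of k_min, ..., n_models correct models.
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(k_min, n_models + 1)
    )


# One model vs. committees of 5 and 51 models (hypothetical accuracy p = 0.7).
for n in (1, 5, 51):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

With p = 0.7, a single model is right 70% of the time, while a majority of five such independent models is right about 83.7% of the time. The gain shrinks as the models’ errors become correlated.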

Simple Ensemble Techniques:

Voting and Averaging are two of the simplest ensemble methods; both are easy to understand and implement. Voting is typically used for classification, and averaging for regression.

- Voting Ensemble – The voting method is generally used for classification problems. In this technique, multiple models make a prediction for each data point. Each model’s prediction is treated as a ‘vote’, and the class that receives the most votes is used as the final prediction.

- Averaging Ensemble – As in voting, multiple predictions are made for each data point. In this method, we take the average of the predictions from all the models and use it as the final prediction. Averaging can be used for regression problems, or for averaging predicted class probabilities in classification problems.
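Both simple techniques fit in a few lines of plain Python. The sketch below assumes each model’s predictions have already been computed and are passed in as one list per model; the helper names `hard_vote` and `average` are hypothetical, not a library API (scikit-learn, for instance, packages these ideas as `VotingClassifier` and `VotingRegressor`).

```python
from collections import Counter
from statistics import mean


def hard_vote(predictions):
    """Majority (plurality) vote across models.

    predictions[m][i] is model m's predicted label for data point i.
    """
    final = []
    for votes in zip(*predictions):  # all models' votes for one data point
        # most_common(1) returns the label with the most votes; on a tie,
        # Counter keeps the label that was encountered first.
        final.append(Counter(votes).most_common(1)[0][0])
    return final


def average(predictions):
    """Average all models' numeric predictions for each data point."""
    return [mean(values) for values in zip(*predictions)]


# Hypothetical predictions from three classifiers for four data points
clf_preds = [
    ["cat", "dog", "dog", "cat"],
    ["cat", "cat", "dog", "dog"],
    ["dog", "dog", "dog", "cat"],
]
print(hard_vote(clf_preds))  # ['cat', 'dog', 'dog', 'cat']

# Hypothetical predictions from three regressors for two data points
reg_preds = [[2.0, 3.0], [4.0, 5.0], [3.0, 4.0]]
print(average(reg_preds))  # [3.0, 4.0]
```

Averaging predicted class probabilities instead of hard labels (often called soft voting) can reuse the same `average` helper, followed by picking the class with the highest averaged probability.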

Advanced Ensemble Techniques:

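- Stacking Ensemble – Stacking is an ensemble learning technique that uses the predictions of multiple base models (for example, a decision tree, k-NN, or an SVM) as input features to train a new model, often called a meta-model. The meta-model then makes the final predictions on the test set. The base-model predictions used to train the meta-model are usually generated out-of-fold (with cross-validation), so that the meta-model does not learn from predictions the base models made on their own training data.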

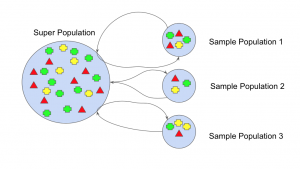

- Bagging Ensemble – The idea behind bagging is to combine the results of multiple models (for instance, several decision trees) to get a generalized result. It relies on bootstrapping, a sampling technique in which we create subsets of observations from the original dataset by sampling with replacement. In the classic bootstrap, each subset is the same size as the original dataset, although bagging implementations may also use smaller subsets. Bagging (or Bootstrap Aggregating) trains one model on each of these subsets (bags), which together give a fair idea of the distribution of the complete set, and then aggregates the models’ predictions by voting (classification) or averaging (regression).

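- Boosting Ensemble – Boosting is a sequential process in which each subsequent model attempts to correct the errors of the models before it, so every model depends on its predecessors. For example, AdaBoost gives more weight to the training examples that earlier models misclassified, while gradient boosting fits each new model to the remaining errors of the ensemble built so far (for squared-error loss, its residuals).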
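The bagging procedure can be sketched in plain Python as follows. The base model is a hypothetical one-feature regression stump (a depth-1 decision tree), chosen only to keep the sketch self-contained; `fit_bagging` and its parameters are likewise illustrative assumptions. Each model is trained on a bootstrap sample drawn with replacement, `sample_size` allows smaller bags, and the final prediction averages the models (a classifier would vote instead).

```python
import random
import statistics


def fit_stump(xs, ys):
    """Base model: a 1-D regression stump (a decision tree of depth 1)."""
    pairs = sorted(zip(xs, ys))
    best = (float("inf"), None)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = statistics.mean(left), statistics.mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, (threshold, lm, rm))
    if best[1] is None:  # every x identical: predict the mean
        mean_y = statistics.mean(ys)
        return lambda x: mean_y
    threshold, lm, rm = best[1]
    return lambda x: lm if x <= threshold else rm


def fit_bagging(xs, ys, n_models=25, sample_size=None, seed=0):
    """Bootstrap aggregating: one stump per bootstrap sample, averaged."""
    rng = random.Random(seed)
    n = len(xs)
    size = sample_size or n  # classic bootstrap: same size as the original
    models = []
    for _ in range(n_models):
        # Draw indices with replacement: some points repeat, others are left out.
        idx = [rng.randrange(n) for _ in range(size)]
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    # Aggregate by averaging (regression); a classifier would vote instead.
    return lambda x: statistics.mean(m(x) for m in models)


xs = list(range(20))
ys = [0 if x < 10 else 10 for x in xs]  # a step function
predict = fit_bagging(xs, ys)
for x in (2, 9.5, 17):
    print(x, predict(x))
```

Near the jump, the bagged prediction can fall between 0 and 10 because the stumps trained on different bags place their thresholds slightly differently; this averaging over perturbed models is where bagging’s smoothing comes from.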
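Boosting’s sequential error correction can be sketched with gradient boosting for regression under squared-error loss, which is one specific boosting algorithm (AdaBoost, which reweights misclassified examples, is another). In this sketch, each new weak learner, a hypothetical one-feature regression stump, is fit to the residuals that the current ensemble still gets wrong, and its correction is scaled by `learning_rate`. All names and defaults are illustrative assumptions, not a particular library’s API.

```python
import statistics


def fit_stump(xs, ys):
    """Weak learner: a 1-D regression stump (a decision tree of depth 1)."""
    pairs = sorted(zip(xs, ys))
    best = (float("inf"), None)
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        lm, rm = statistics.mean(left), statistics.mean(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, ((pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm))
    if best[1] is None:  # every x identical: predict the mean
        mean_y = statistics.mean(ys)
        return lambda x: mean_y
    t, lm, rm = best[1]
    return lambda x: lm if x <= t else rm


def fit_boosting(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting with squared-error loss: each new stump is fit to
    the residuals that the ensemble built so far still gets wrong."""
    base = statistics.mean(ys)  # round 0: a constant prediction
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        # For squared-error loss, residuals are proportional to the negative gradient.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add a shrunken correction; the next stump depends on this update.
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


xs = list(range(20))
ys = [0 if x < 10 else 10 for x in xs]  # a step function
for rounds in (1, 10, 50):
    model = fit_boosting(xs, ys, n_rounds=rounds)
    print(rounds, round(model(2), 3), round(model(17), 3))
```

Because every stump is trained on the residuals left by the previous ones, the models cannot be trained in parallel the way bagging’s can. A smaller `learning_rate` generally needs more rounds but makes each correction more cautious.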

III. Conclusion

Ensemble methods are frequently used to win machine learning competitions, where sophisticated combinations of models produce highly accurate results. However, they are often not preferred in industries where interpretability matters more, because a combination of many models is harder to explain than a single one. Even so, the effectiveness of these methods is undeniable, and their benefits in appropriate applications can be huge. In fields such as healthcare, even a small improvement in the accuracy of machine learning algorithms can be truly valuable.